A Primer on Data Centers

Welcome back to the Public Comps blog! Our goal is to provide high-quality, data-driven research on companies and industries. I also publish my research and other learnings regularly here.

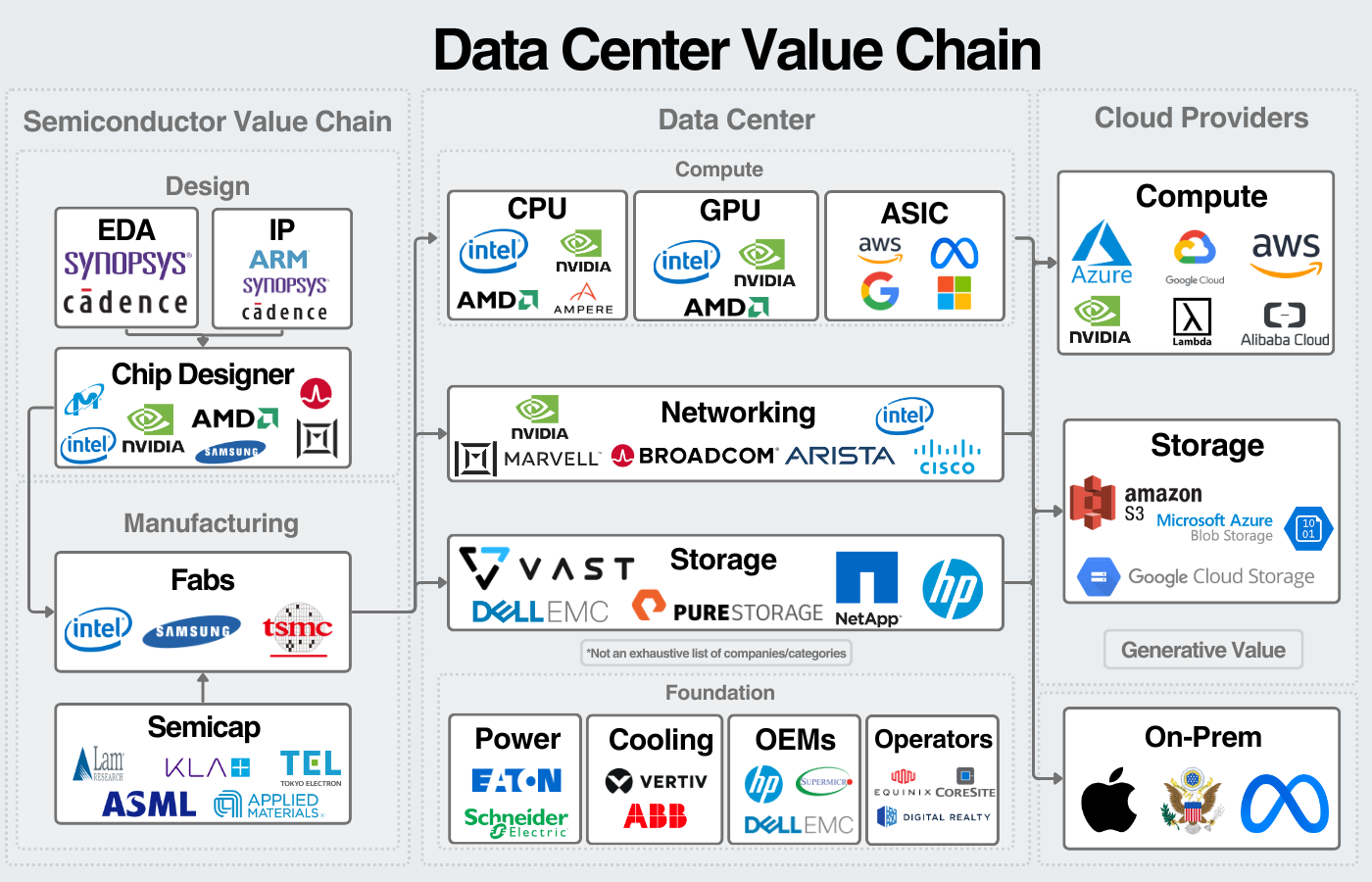

The data center today can be broken down into 3 categories for investors. At the foundational level, semiconductors enable most of the technology in a data center. In the middle, we have the data center itself, which can be broken down into compute, networking, and storage; these are packaged in servers. Supporting that is the technology necessary to run the data center, such as power and cooling, as well as the 3rd-party operators of the data centers. Finally, we have the cloud layer, which provides the abstraction layer on which to develop technology.

I won’t be discussing the semi value chain here, but you can find my primer here. Additionally, SemiAnalysis and Fabricated Knowledge are two of the best semi publications to follow.

With that, we can start to break down the data center.

Visualizing the Data Center

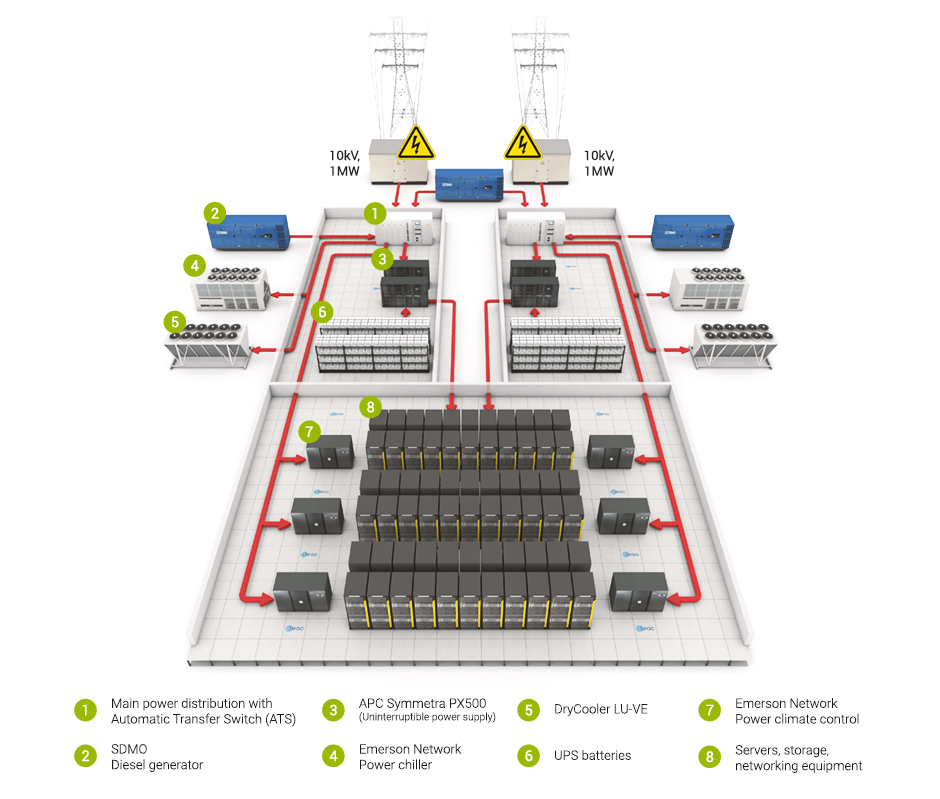

It’s valuable to visualize the scale of data centers; here’s a pretty good image of how they’re structured:

These puppies are massive too; some of the largest hyperscaler data centers are more than a million square feet, or roughly 17 football fields (end zones included). The infrastructure required to build a data center includes the land, construction, transformers, power management, and cooling technology.

Jensen Huang has previously estimated that ~50% of the cost of a data center goes to that infrastructure and the other 50% goes to compute, networking, and storage.

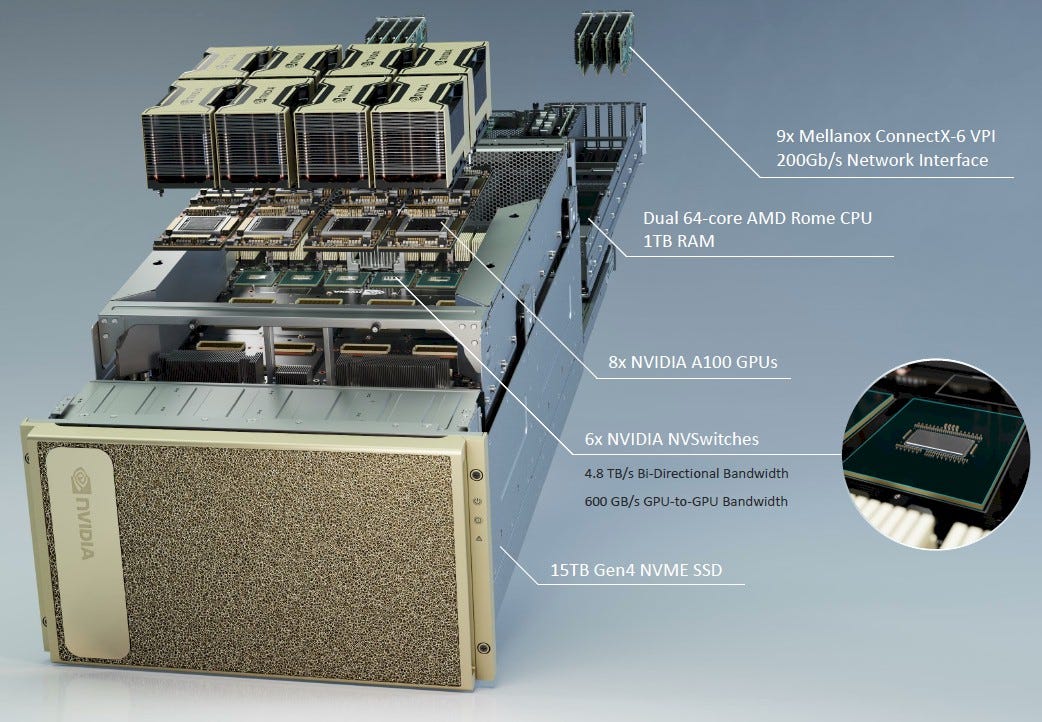

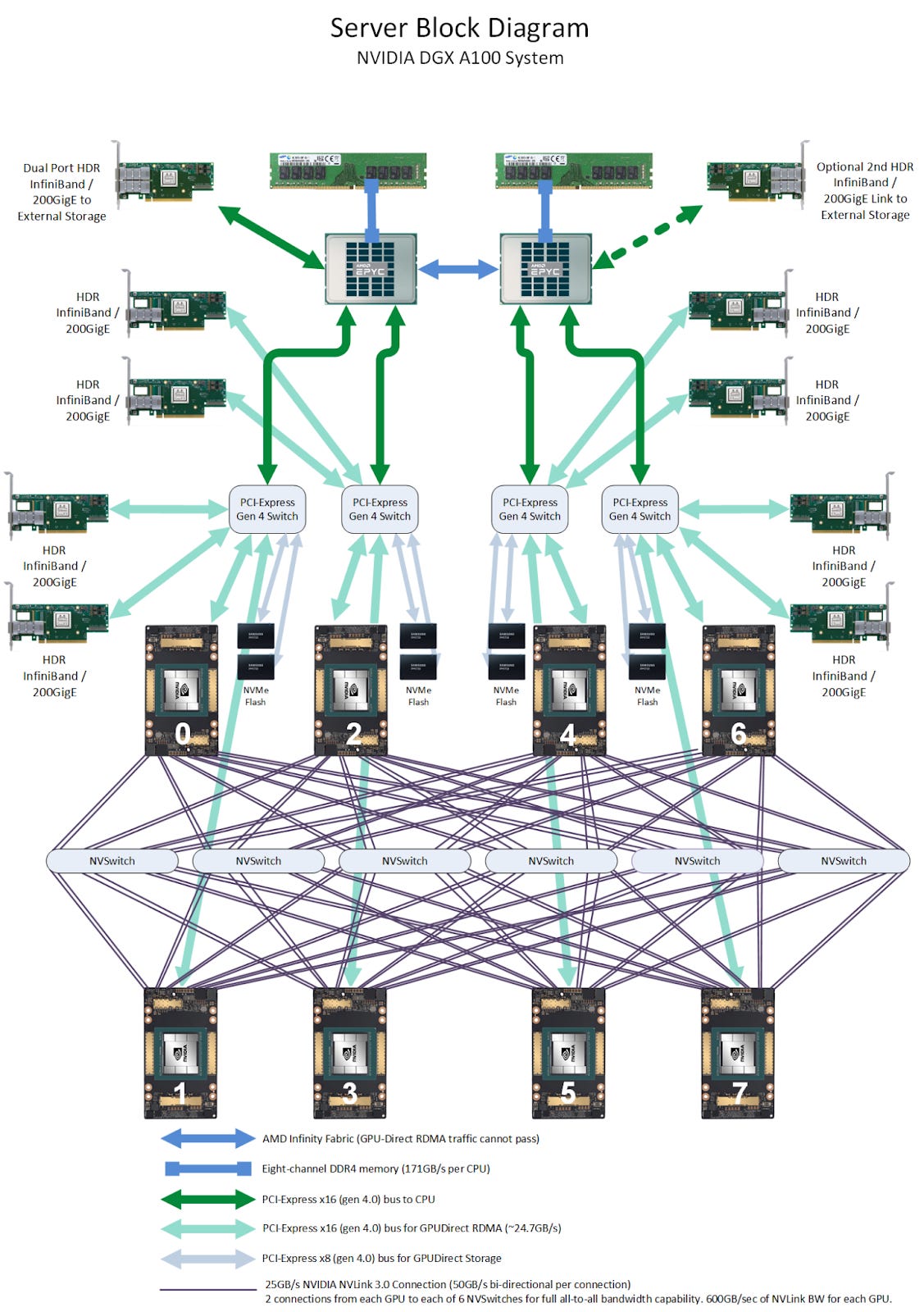

All of this equipment exists to power the servers that house the compute and storage. Here’s an example of an Nvidia DGX A100 server:

These servers are stored in racks with other servers, networking equipment, and storage. Then there are hundreds to thousands of these racks in a data center:

It’s a pretty incredible testament to human ingenuity to develop a world of technology hosted on a few thousand buildings’ worth of computers (but I digress).

Let’s start to break down the markets in the data center industry.

1. Compute

Compute power is the heart of the data center.

Compute Basics

Compute refers to the processing power and memory required to run applications on servers. Depending on the type of workload, the server will use different types of chips, typically CPUs or GPUs. CPUs are the central processors of computers; they are good at handling complex operations and act as the main interface with software. GPUs excel in parallel processing, completing many simple operations at once. This is why they work so well for their original purpose in graphics, and why they’re well-designed for AI workloads which are made up of many small calculations.
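To make "many small calculations" concrete, here's a minimal sketch in plain Python. It is not how a GPU is actually programmed; the function name and the tiny matrices are made up for illustration. It multiplies two matrices and counts the multiply-adds. Each output cell depends only on one row and one column, so in principle every cell could be computed at the same time, which is the kind of work parallel chips are built for.

```python
def matmul_with_op_count(a, b):
    """Multiply matrices a (m x k) and b (k x n) and count the multiply-adds."""
    m, k, n = len(a), len(b), len(b[0])
    ops = 0
    out = [[0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            # Cell (i, j) needs only row i of a and column j of b,
            # so it is independent of every other cell.
            total = 0
            for p in range(k):
                total += a[i][p] * b[p][j]
                ops += 1
            out[i][j] = total
    return out, ops

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
result, ops = matmul_with_op_count(a, b)
print(result)  # [[19, 22], [43, 50]]
print(ops)     # 8 multiply-adds (2 * 2 * 2)
```

The operation count grows as m × n × k, so real AI workloads with very large matrices end up with an enormous number of these small, independent operations.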

Other types of chips, such as application-specific integrated circuits (ASICs) and field-programmable gate arrays (FPGAs), are also used, but much less frequently. ASICs are custom-made chips for specific workloads, such as Google’s AI accelerator TPUs. Logically, we’ll continue to see ASICs used more frequently in cloud data centers as minor efficiency gains can lead to significant cost savings for the hyperscalers. FPGAs can be reconfigured to implement different operations; they aren’t widely used in data centers now but are promising for the future.

CPU Market

The data center CPU market has historically been dominated by Intel, with AMD a distant second. Now, AMD is posing more competition, as are Arm-based CPUs from Ampere, Amazon, Nvidia, and others.

Two major trends are playing out in the CPU market.

1. AMD taking share

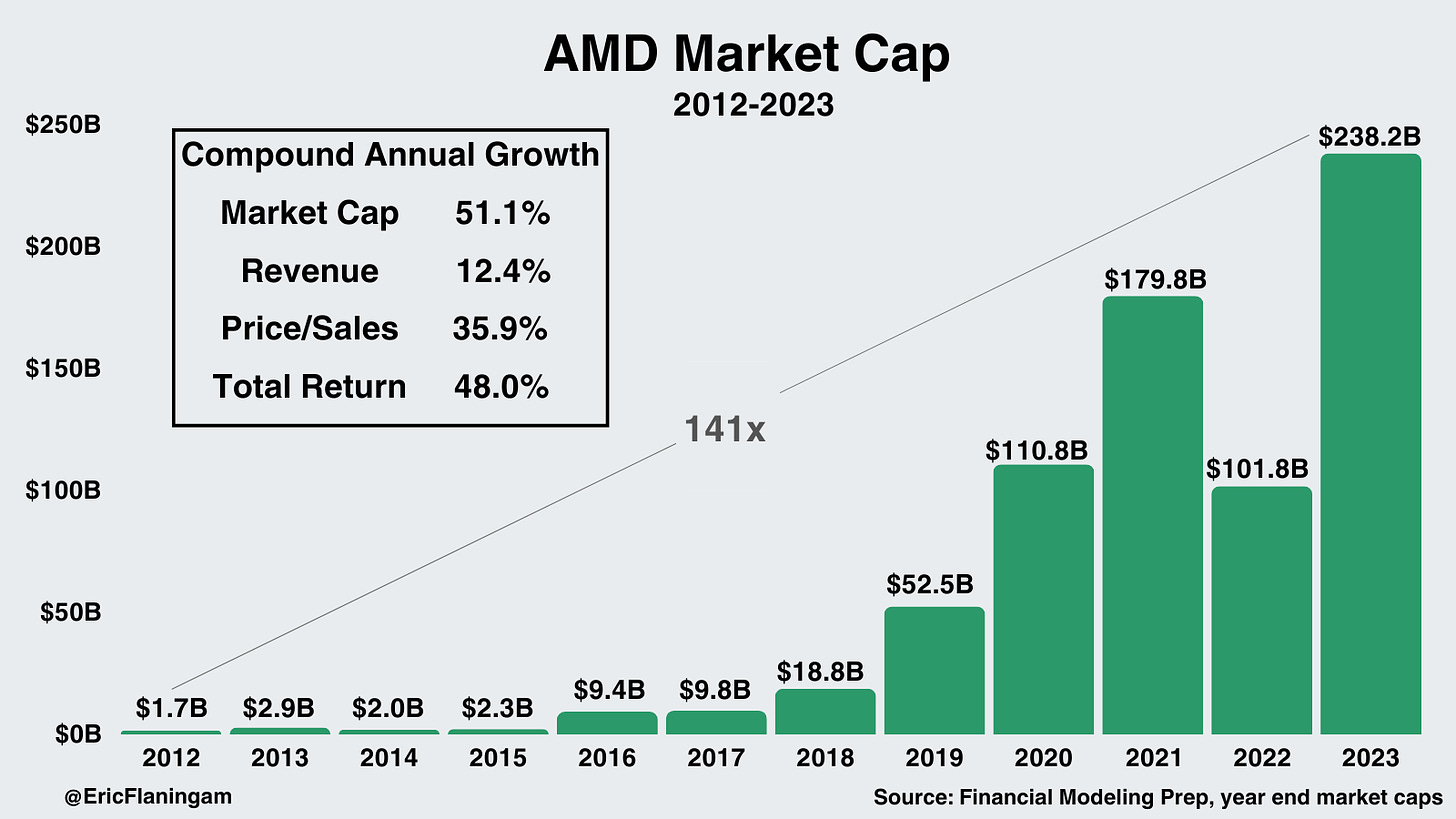

First, AMD has been taking share from Intel in x86 processors. In large part, this has been due to Lisa Su’s leadership. She took over in 2014, and AMD’s results over the last decade have been unbelievable:

Their EPYC line of data center CPUs continues to be successful, with their latest generation being the 4th-Gen EPYC Genoa.

Intel, meanwhile, is in the process of attempting to reinvent its business. They aspire to recapture the manufacturing of the world’s most advanced process nodes from TSMC. A massive effort is underway to build out Intel Foundry Services. The future of that effort is unclear. Intel does have a highly challenging path to defend CPU market share, build out Intel Foundry Services, and attempt to take GPU market share. According to Jay Goldberg, a semiconductor consultant,

“AMD is beating Intel on all the metrics that matter, and until and unless Intel can fix its manufacturing, find some new way to manufacture things, they will continue to do that.”

Now, I’m not here to comment on whether Intel or AMD is a better company. The comparison, however, is an interesting lesson on expectations. AMD’s expectations couldn’t have been lower when Lisa Su took over as CEO. Paradoxically, this significantly improves the risk profile of an investment. As Howard Marks puts it, “The greatest risk doesn’t come from low quality or high volatility. It comes from paying prices that are too high.”

2. Arm-based servers taking share

The other trend playing out is with Arm-based processors. For years, Arm has been the preferred architecture for smartphones due to its efficiency. Recently, that trend has been carrying over to the data center as well.

Amazon leads this movement. It first released its Graviton processor in 2018. Since then, it’s grown to an estimated ~3-4% of data center CPU shipments. Ampere Computing, one of the newer chip companies on the block, has also gained a respectable amount of market share. Additionally, Nvidia recently released its first data center CPU, the Grace line of chips. Other companies like Microsoft are making Arm-based CPUs as well.

This trend is to the detriment of both Intel and AMD and should continue to be watched as the move toward custom silicon continues.

GPU Market

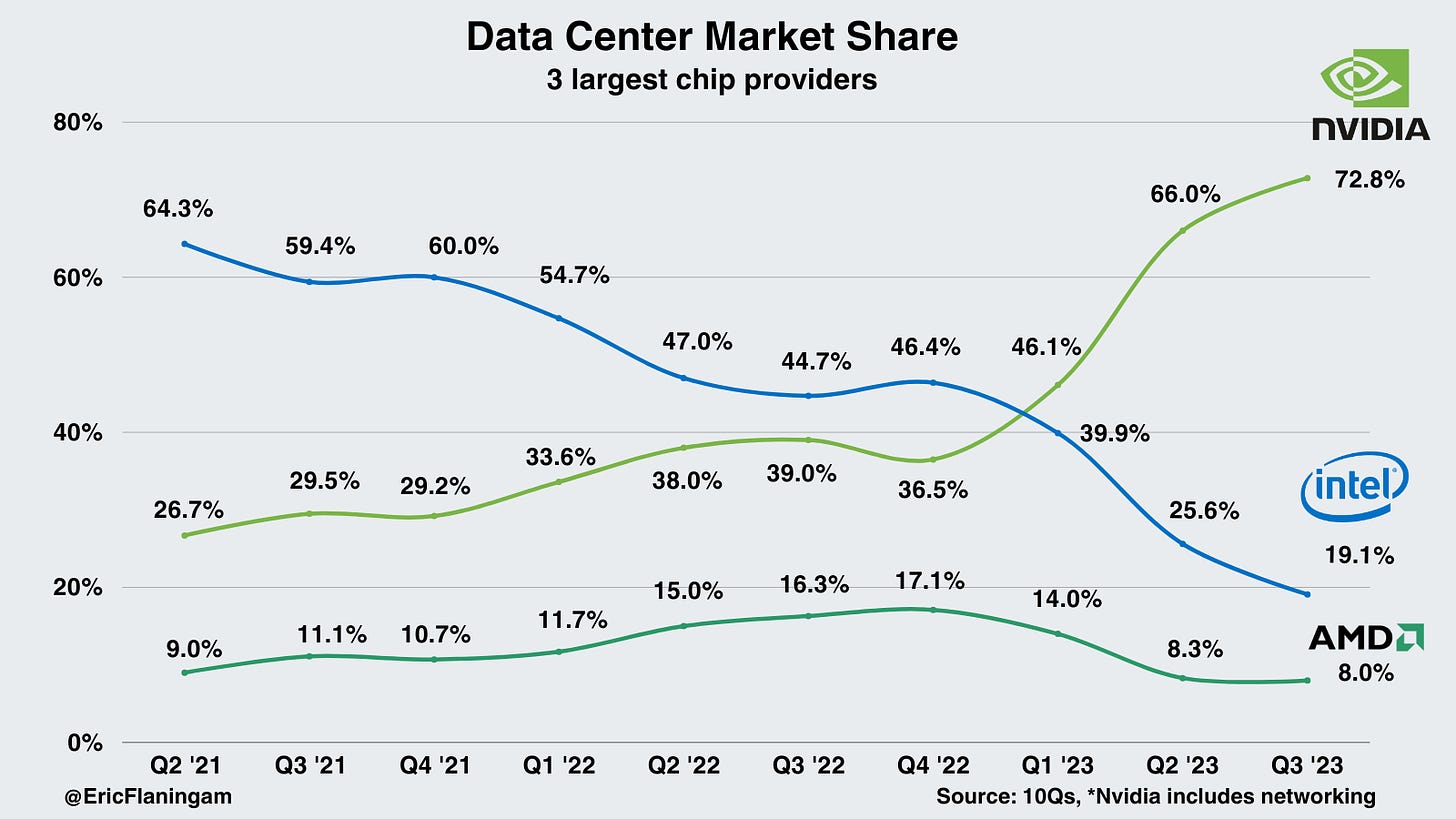

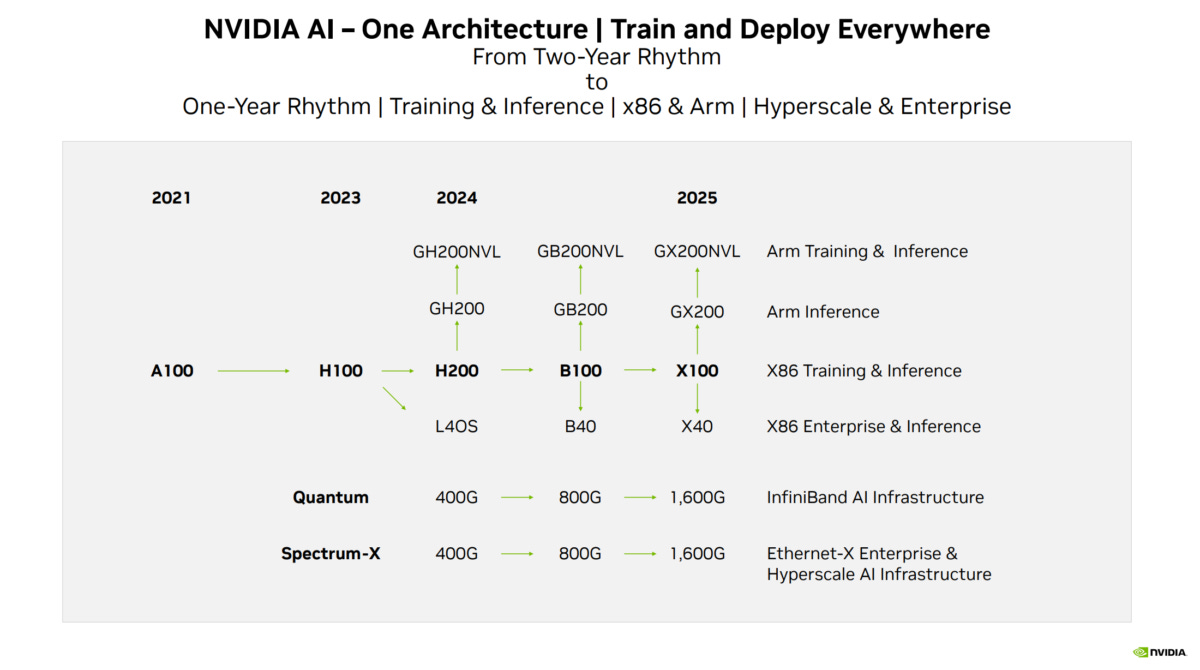

The GPU market is currently dominated by Nvidia. They’re aggressively investing to keep it that way.

At this point, the main competitor for data center GPUs is AMD’s MI300. AMD now expects its data center GPUs to generate $3.5B in revenue in 2024. Intel also offers a data center GPU, which analysts estimate will contribute $850M in revenue next year.

Nvidia brought in $18.12B last quarter, and data center revenue last quarter was $14.51B. Removing networking, data center revenue was $11.9B, most of which is from GPUs. An estimate from Wells Fargo projects $46B in GPU revenue for Nvidia next year.
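As a quick sanity check on those figures, here's a small sketch of the arithmetic. The variable names are mine, and the inputs are just the rounded numbers from the paragraph above, so treat the outputs as approximate.

```python
# Figures quoted in the paragraph above, in billions of USD.
total_revenue = 18.12
data_center_revenue = 14.51
data_center_ex_networking = 11.9

data_center_share = data_center_revenue / total_revenue
implied_networking = data_center_revenue - data_center_ex_networking

print(f"Data center share of total revenue: {data_center_share:.1%}")  # 80.1%
print(f"Implied networking revenue: ${implied_networking:.2f}B")       # $2.61B
```

In other words, roughly four out of every five dollars Nvidia brought in that quarter came from the data center.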

ASIC Market - specifically AI accelerators

The other competition for GPUs comes from AI accelerators, which could win workloads from Nvidia in the long run. The most likely challengers are in big tech, as these four hyperscalers have their own AI accelerators:

- Google: Google’s AI accelerators are called Tensor Processing Units (TPUs). TPUs have been in production since 2016, are supported by Broadcom, and are fabbed by TSMC.

- Amazon: Trainium became generally available in October 2022, and Inferentia became generally available in 2019. TSMC also fabs Amazon’s chips.

- Meta: The Meta Training and Inference Accelerator, or MTIA, was designed in 2020 on TSMC’s 7nm process.

- Microsoft: Microsoft Maia was announced in 2023, is expected to be available in 2024, and is fabbed on TSMC’s 5nm process.

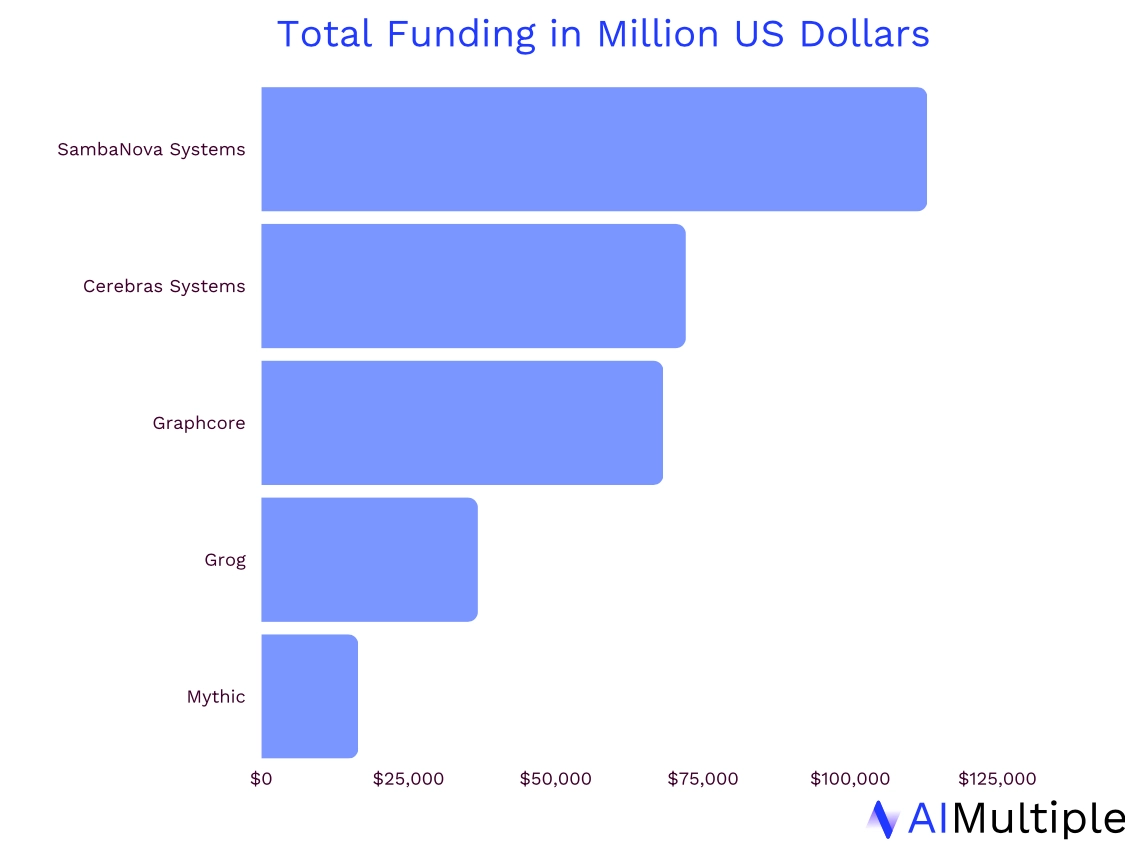

Finally, startups could pose challenges too, although this seems unlikely in the short term due to limited leading-edge fab capacity. AIMultiple compiled funding data for some of the largest AI chip startups, and this will likely be a hot VC target market for years:

2. Data Center Networking

Networking is the second major technology I’ll cover. Networking enables the flow of data between servers, storage, and applications. This section will cover the important concepts and technologies in networking, Ethernet vs InfiniBand, and the major players in the market.

Networking basics

The 3 most basic networking technologies are switches, routers, and cables to connect them. There are also several semiconductors for the processing of data that I’ll touch on later.

- Switches connect servers, storage, and other networking equipment; they provide the data flow between these devices.

- Routers connect different networks and sub-networks. As data flows in and out of the data center, routers process the flow of data so it goes to the right place.

- Switches facilitate communication within the same network. Routers provide the connection to other networks.

- Switches and routers can both be Ethernet or InfiniBand. Ethernet is more widely adopted while InfiniBand is preferred in high-performance computing.

- Fiber-optic and cable: these are the physical cables connecting the routers, switches, and data centers to the rest of the world.

These technologies make up the majority of networking in the data center. There are additional technologies like firewalls, but I wanted to focus on the enablers of high-performance computing. All the different networks are then organized into network types such as LAN, MAN, and WAN, but I won’t dive into those here either.
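To illustrate the switch vs router split, here's a simplified sketch using Python's standard `ipaddress` module. The function name, subnet, and IP addresses are hypothetical, and real networks add wrinkles this ignores (VLANs, layer-3 switches, and so on). The idea is simply that traffic staying inside one network can be handled by switches, while traffic leaving it needs a router.

```python
import ipaddress

def needs_router(src: str, dst: str, subnet: str) -> bool:
    """Return True if traffic from src to dst has to leave the given subnet."""
    network = ipaddress.ip_network(subnet)
    src_inside = ipaddress.ip_address(src) in network
    dst_inside = ipaddress.ip_address(dst) in network
    # Both endpoints in the same network: a switch can forward directly.
    return not (src_inside and dst_inside)

rack_subnet = "10.0.1.0/24"  # hypothetical subnet for one group of servers
print(needs_router("10.0.1.10", "10.0.1.20", rack_subnet))  # False: same network
print(needs_router("10.0.1.10", "10.0.2.20", rack_subnet))  # True: crosses networks
```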

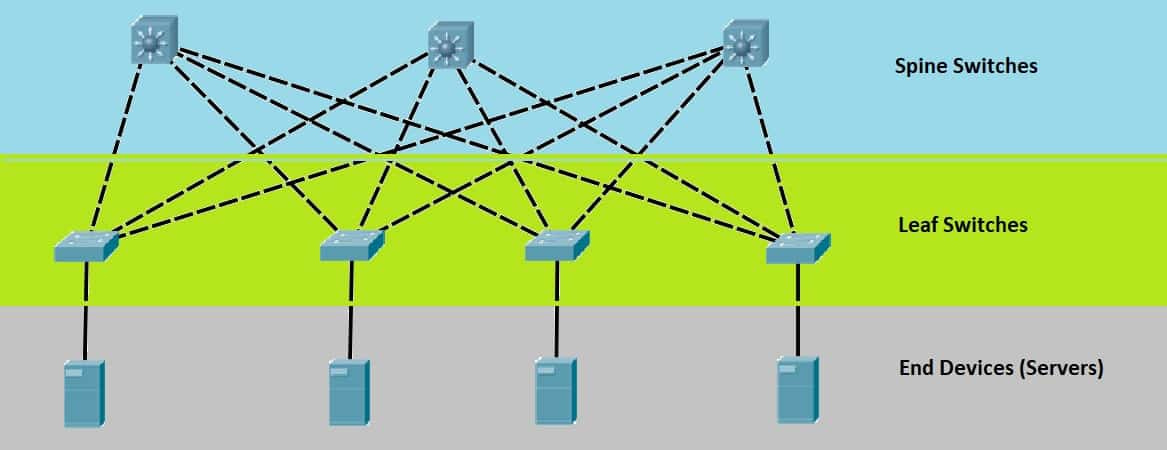

The popular model for data center networking is the spine-leaf model:

Each rack will have switches at the top of the rack (leaf switches). Those switches then each connect to several bigger switches (spine switches) that tie the network together. An important concept is that each leaf connects to each spine. So, if one spine switch goes down, traffic can be routed through the others without losing service.
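The "every leaf connects to every spine" idea can be sketched in a few lines. This is a toy model with made-up switch names, not a real network configuration; it just shows why losing a single spine still leaves a path between any two racks.

```python
from itertools import product

spines = ["spine-1", "spine-2"]            # hypothetical switch names
leaves = ["leaf-1", "leaf-2", "leaf-3"]

# In a spine-leaf fabric, every leaf has a link to every spine.
links = set(product(leaves, spines))

def paths(src_leaf, dst_leaf, failed_spines=()):
    """List the leaf -> spine -> leaf paths that avoid any failed spines."""
    return [
        (src_leaf, spine, dst_leaf)
        for spine in spines
        if spine not in failed_spines
        and (src_leaf, spine) in links
        and (dst_leaf, spine) in links
    ]

print(len(links))                              # 6 links (3 leaves x 2 spines)
print(paths("leaf-1", "leaf-3"))               # two paths, one per spine
print(paths("leaf-1", "leaf-3", {"spine-1"}))  # one path left, via spine-2
```

Adding spines adds both bandwidth and redundancy, since every pair of leaves gains another two-hop path.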

Then within servers, networking occurs as well. For example, in an Nvidia DGX A100, 8 GPUs are connected by NVSwitches to distribute computing:

Finally, we should touch on InfiniBand vs Ethernet, the two major networking technologies. Fundamentally, Ethernet is slower, cheaper, and more widely adopted. InfiniBand is faster, more expensive, and dominant in high-performance computing.

Ethernet was the dominant networking technology everywhere throughout the 2000s. In the early 2010s, InfiniBand passed Ethernet as the dominant technology for high-performance computing. As high-performance computing needs grew, so did InfiniBand’s importance. Ethernet is still the standard for networking everywhere else, but InfiniBand is the standard in high-performance computing. InfiniBand is typically used to connect multiple servers, or servers and storage. This reduces latency when running workloads that process huge amounts of data, like training LLMs.

Networking Market

There are three leading players in the networking equipment market: Cisco, Arista, and Nvidia (including Mellanox pre-acquisition).

Two major trends are playing out in data center networking:

1. Cisco vs Arista in Ethernet

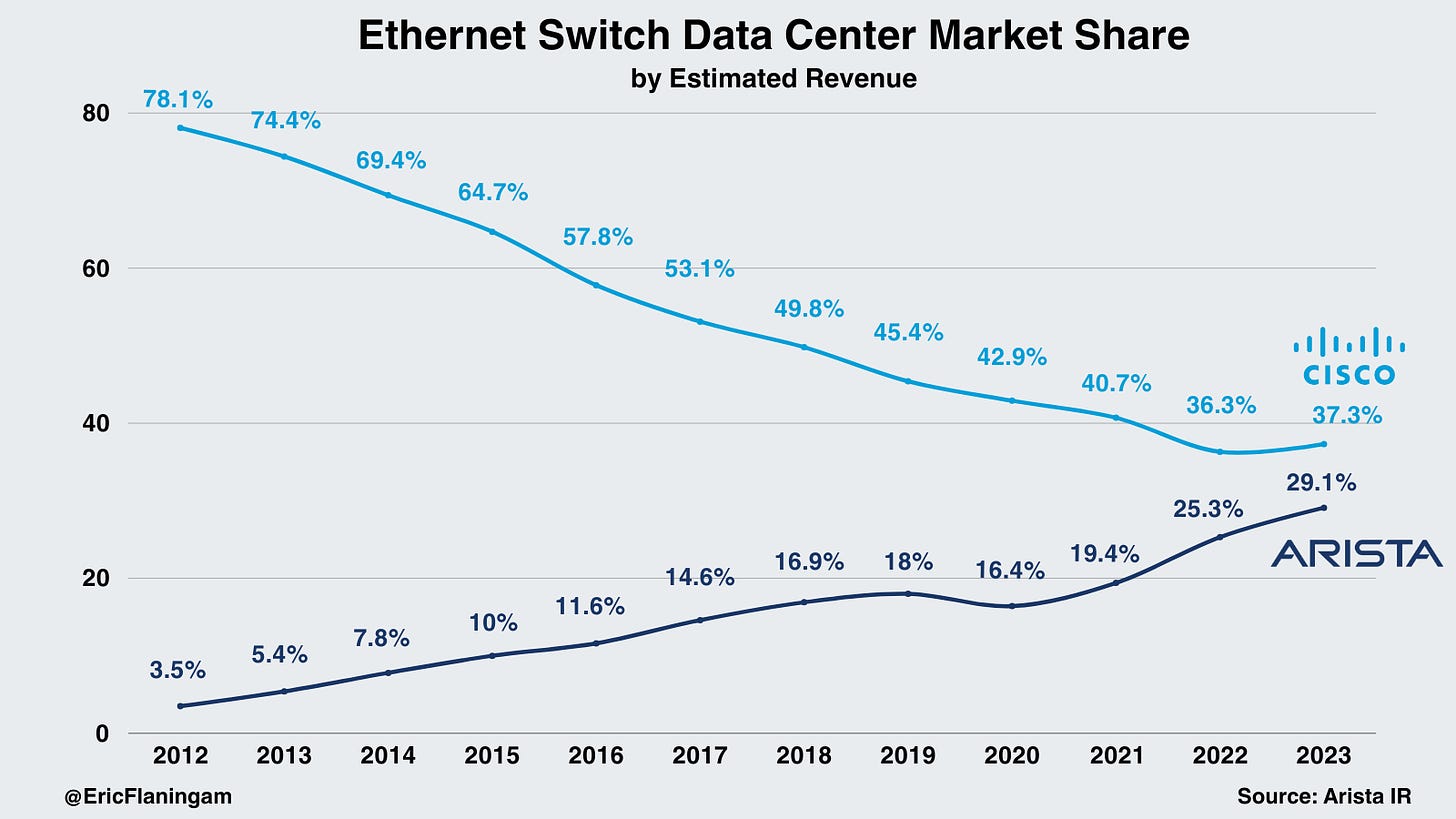

Arista has steadily taken share from Cisco in the data center over the last decade. This has primarily come from Arista’s focus on high-performance cloud providers. On the Q3 earnings call, management noted that more than 40% of Arista’s revenue is projected to come from “cloud and AI titans”:

This new cloud and AI titan sector is projected to represent greater than 40% of our total revenue mix due to the favorable AI investments expected in the future.

As we’ve seen in semiconductors, as technology gets increasingly complex, verticalization can become a risk instead of an asset. It’s similar to what we’ve seen with Cisco in the data center over the last decade. While Cisco has been focused on managing a $70B networking/security/software business, Arista has focused purely on data center networking. With large customers like Microsoft funding that focus, Arista has been a brutal competitor for Cisco.

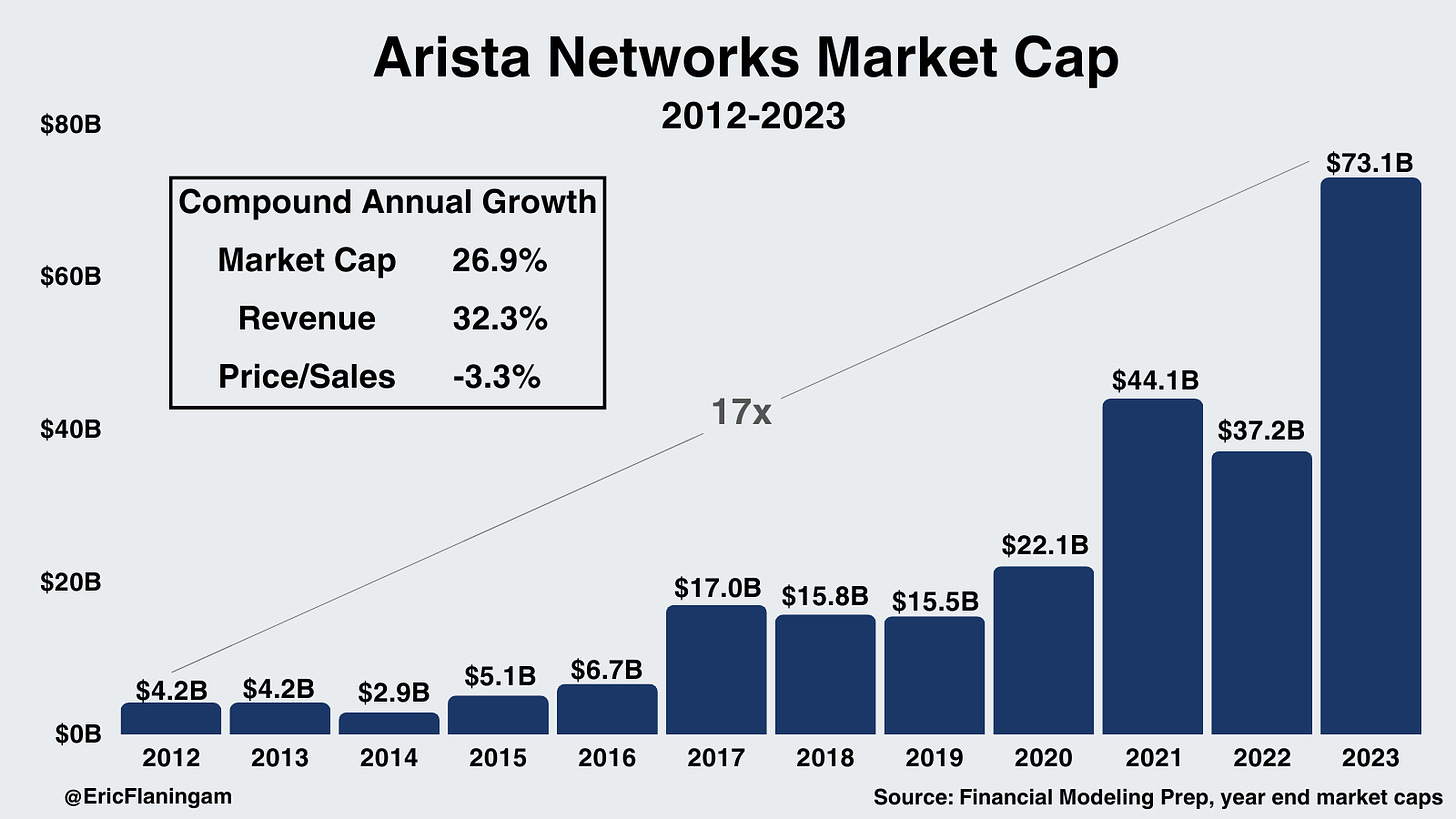

With that execution, Arista has grown its revenue at a 32.3% CAGR and its market cap at a 26.9% CAGR.
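For readers less familiar with CAGR (compound annual growth rate), here's a minimal sketch of the calculation. The function names and the inputs are hypothetical and chosen only to show the mechanics; the five-year horizon in particular is my assumption, not the period behind the figures above.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between a start and an end value."""
    return (end_value / start_value) ** (1 / years) - 1

def growth_multiple(rate: float, years: float) -> float:
    """How many times larger a value gets after compounding at `rate`."""
    return (1 + rate) ** years

# Hypothetical inputs, for illustration only.
print(f"{cagr(100, 200, 3):.1%}")           # doubling in 3 years is about 26.0% a year
print(f"{growth_multiple(0.323, 5):.2f}x")  # 32.3% a year, compounded over 5 years
```

The takeaway is how quickly these rates compound: at growth in the low 30s percent, a business roughly quadruples in five years.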

2. InfiniBand vs Ethernet

InfiniBand vs Ethernet is a fascinating debate, as Ethernet has been the networking standard for decades. InfiniBand has gained a foothold in high-performance computing and is now prevalent in the data center.

To summarize the current state: neither InfiniBand nor Ethernet is perfect for AI. Both will go through increased development to become the standard for AI. For the near-term future, the packaging of Nvidia with InfiniBand will be the standard. However, over a multi-year horizon, as we see more custom chips, custom system architectures, and Ethernet development, it’s challenging to predict an end state for the market.

Arista’s CEO provided some insights last year on the debate (by the way, this interview is worth reading for those interested in data center infrastructure). Summarizing the main points:

- First, neither InfiniBand nor Ethernet is perfect for AI; both will need to improve to service AI’s needs.

- She currently sees InfiniBand as the technology connecting GPUs, and Ethernet as being one layer out - connecting the GPU clusters to the rest of the data center.

- She estimates InfiniBand is about two-thirds of the AI networking market today, and expects that to flip to two-thirds Ethernet in the coming years.

- Ultimately, she sees the Ultra Ethernet Consortium as the driving force in the industry to get Ethernet on par with InfiniBand.

Now, I’m not going to provide any opinion on the future of this debate, as I’d likely make a fool of myself (however, if any readers are well-versed in this realm, I’d love to hear your thoughts).

A reasonable conclusion is that this outcome will be highly challenging to predict, and should be considered as a piece of misanalysis risk when studying any of these companies.

Nvidia Networking

Networking has been one of the key stories in Nvidia’s historic 2023. Nvidia acquired Mellanox, the leader in InfiniBand networking, in 2020. This looks like it may go down as an all-time great acquisition, with Nvidia’s networking business now multiple times the size of Mellanox’s just 3.5 years later.

Nvidia has been able to integrate networking into its products to sell integrated platforms like the DGX supercomputer shown earlier. It gives them a near monopoly on data center GPUs and InfiniBand networking.

Bringing together the whole platform, Nvidia offers the entire AI stack from software to silicon:

To provide some additional color, The Next Platform estimates the size of Nvidia’s current networking business:

When we put what Nvidia said on the call through our spreadsheet magic, we reckon that the Compute part of Compute & Networking accounted for $11.94 billion in sales, up 4.24X compared to a year ago, and the Networking part accounted for $2.58 billion in sales, up by 2.55X year on year. Huang said that InfiniBand networking sales rose by a factor of 5X in the quarter, and we think that works out to $2.14 billion and represents 83.1 percent of all networking. The Ethernet/Other portion of the Networking business accounted for $435 million and declined by 25.2 percent.
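The split in that quote is easy to recompute. This sketch uses only the rounded figures quoted above, with variable names of my own; the small gap between the InfiniBand share it prints and the quoted 83.1 percent presumably comes from rounding in the published numbers.

```python
# Figures from the quote above, in billions of USD.
compute = 11.94
networking = 2.58
infiniband = 2.14
ethernet_other = 0.435

compute_and_networking = compute + networking
infiniband_share = infiniband / networking
ethernet_share = ethernet_other / networking

print(f"Compute + networking: ${compute_and_networking:.2f}B")           # $14.52B
print(f"InfiniBand share of networking: {infiniband_share:.1%}")         # 82.9%
print(f"Ethernet/Other share of networking: {ethernet_share:.1%}")       # 16.9%
```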

Nvidia also recently released Spectrum-X, an Ethernet platform designed for AI workloads (so they clearly see a future for Ethernet in AI as well). Their Ethernet business is still relatively small but provides them with yet another avenue of expansion to dominate the data center.

Networking Silicon

Finally, I wanted to touch on networking semiconductors. First, some more terminology:

- NICs: A Network Interface Card is the semiconductor that communicates with the switches and passes the data on to the CPU for processing.

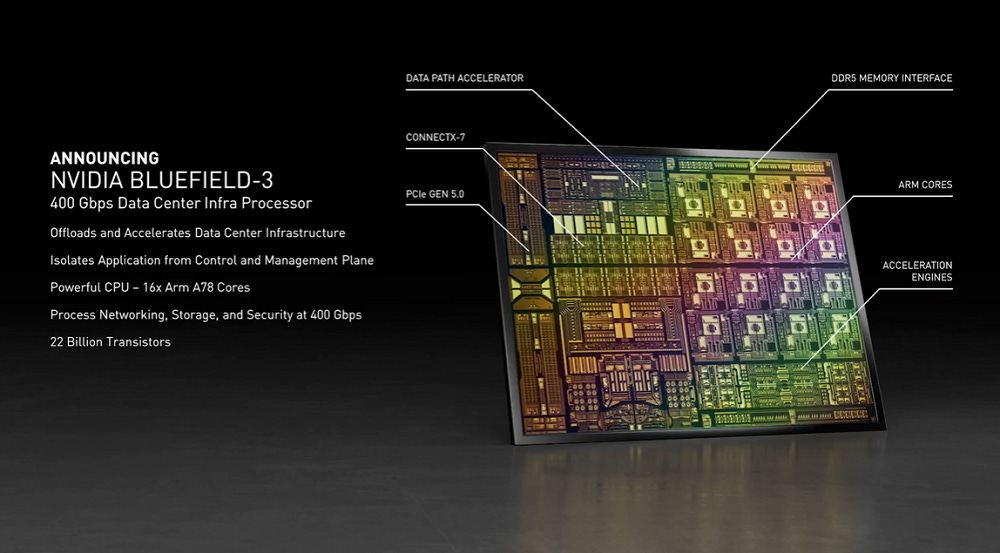

- SmartNIC: A SmartNIC takes it a step further and removes some of the processing workload from the CPU. The SmartNIC can then communicate directly with the GPU.

- Data Processing Units (DPUs): A DPU then takes it (you guessed it) another step further, integrating more capabilities onto the chip. The goal with the DPU is to improve processing efficiency for AI workloads and remove the need for processing from the CPU.

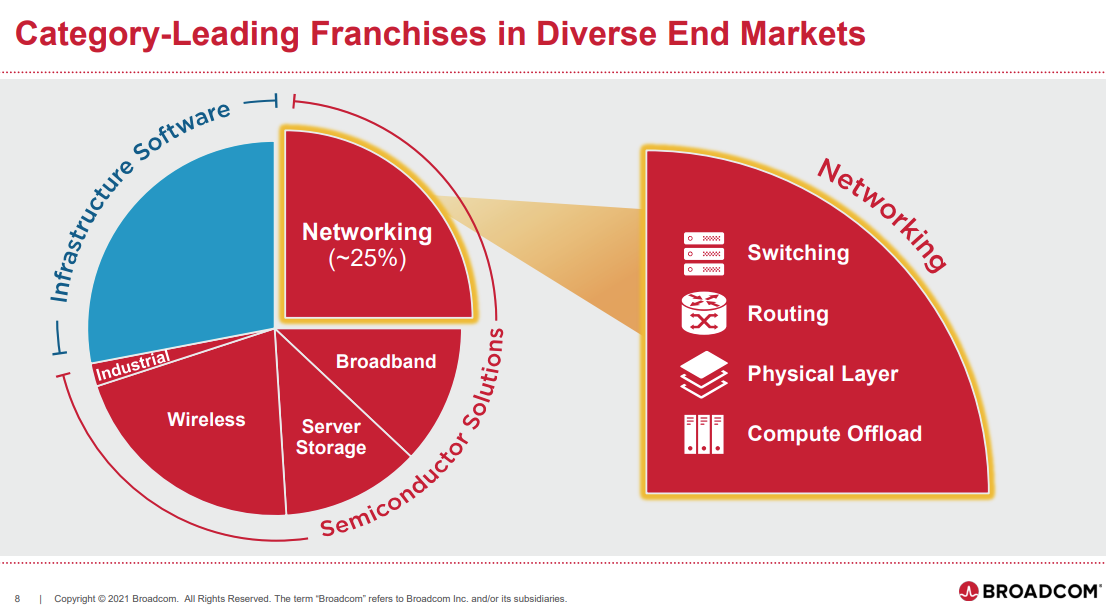

Broadcom and Marvell are the two largest semiconductor providers in the networking space (outside of Nvidia). Broadcom’s networking business is massive and makes up ~25% of their revenue:

We can get an idea of the size of that from their most recent earnings call:

Q4 networking revenue of $3.1 billion grew 23% year-on-year, representing 42% of our semiconductor revenue. In fiscal '23, networking revenue grew 21% year-on-year to $10.8 billion. If we exclude the AI accelerators, networking connectivity represented about $8 billion, and this is purely silicon.
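A couple of figures fall out of that quote with simple arithmetic. This is a back-of-the-envelope sketch with my own variable names; the inputs are rounded ("about $8 billion"), so the outputs are approximations rather than reported numbers.

```python
# Figures from the earnings-call quote above, in billions of USD.
q4_networking = 3.1
q4_networking_share_of_semis = 0.42
fy23_networking = 10.8
fy23_networking_ex_ai = 8.0  # "about $8 billion"

implied_q4_semis = q4_networking / q4_networking_share_of_semis
implied_ai_accelerators = fy23_networking - fy23_networking_ex_ai

print(f"Implied Q4 semiconductor revenue: ${implied_q4_semis:.1f}B")           # $7.4B
print(f"Implied FY23 AI accelerator revenue: ${implied_ai_accelerators:.1f}B")  # $2.8B
```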

With $11B in revenue in the last 12 months, Broadcom is the second largest networking business in the world behind only Cisco.

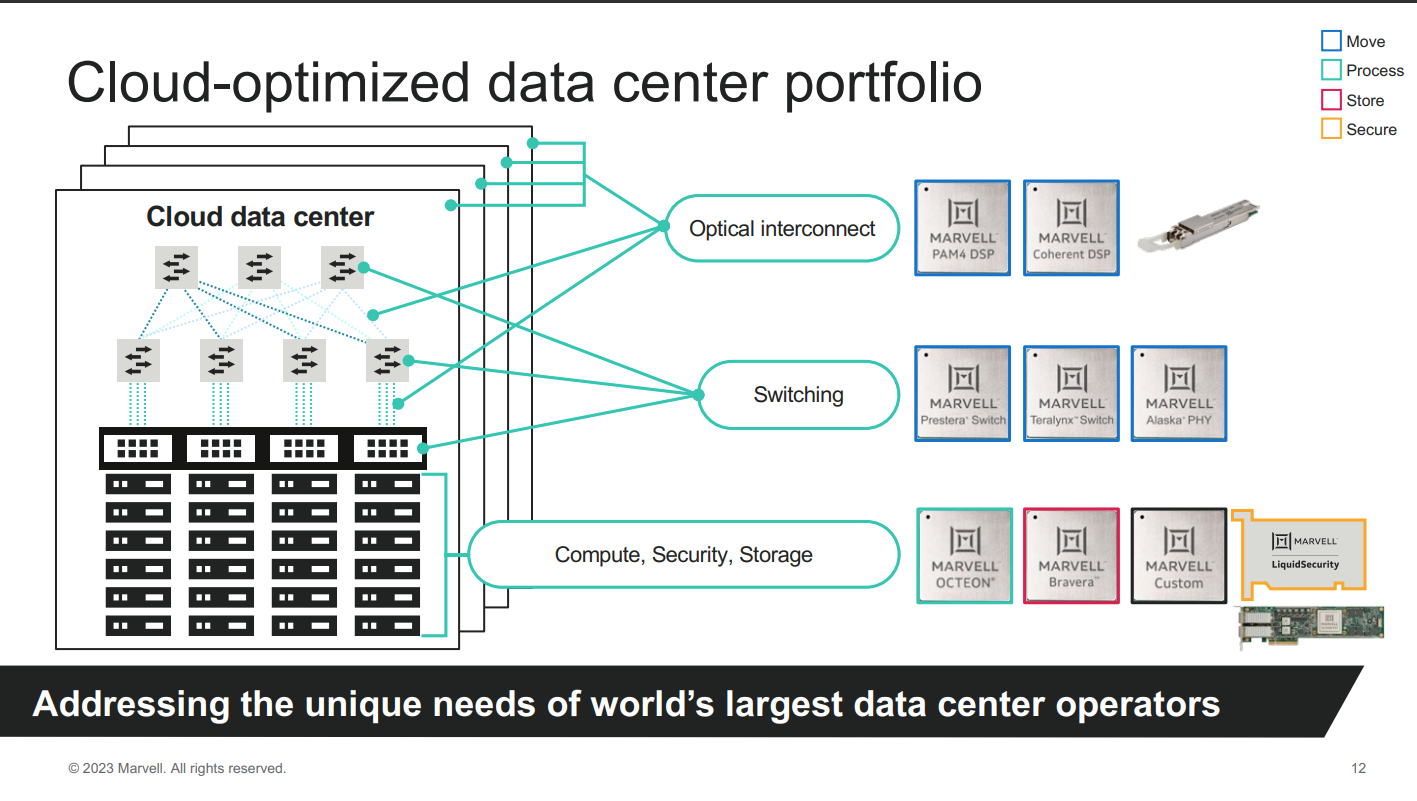

Marvell also designs chips for storage and networking; their goal is to “build the future of data infrastructure.” They don’t break out revenue specifically for data center networking; between their data center and networking businesses, they generated ~$3.2B in the last twelve months.

Below, their networking chips are the 5 chips in blue:

Both Broadcom and Marvell provide strong exposure to the data center.

3. Storage

Storage Basics

Storage is the 3rd major segment for data center computing. Within data center storage, two primary options exist: flash and disk. Flash, represented by solid-state drives or SSDs, is the go-to choice for high-performance computing workloads demanding fast data access with high bandwidth and low latency. On the flip side, disks (Hard Disk Drives - HDDs) offer higher capacity but come with lower bandwidth and higher latency. For long-term storage needs, disks remain the preferred technology.

There are a few storage architectures within the data center:

- Direct Attached Storage (DAS) - Storage is attached directly to a server; only that server can access it.

- Storage Area Network (SAN) - A network that allows multiple servers to access pooled storage.

- Network Attached Storage (NAS) - A dedicated file-storage device connected to the network that multiple servers can access.

- Software Defined Storage (SDS) - A virtualization layer that pools physical storage and offers additional flexibility and scalability.

Other beneficiaries

For the sake of brevity, I won’t go into detail on the following sections but I want to call out the other critical components of the data center:

1. Servers

Servers integrate the CPUs, GPUs, networking, memory, and cooling into one unit.

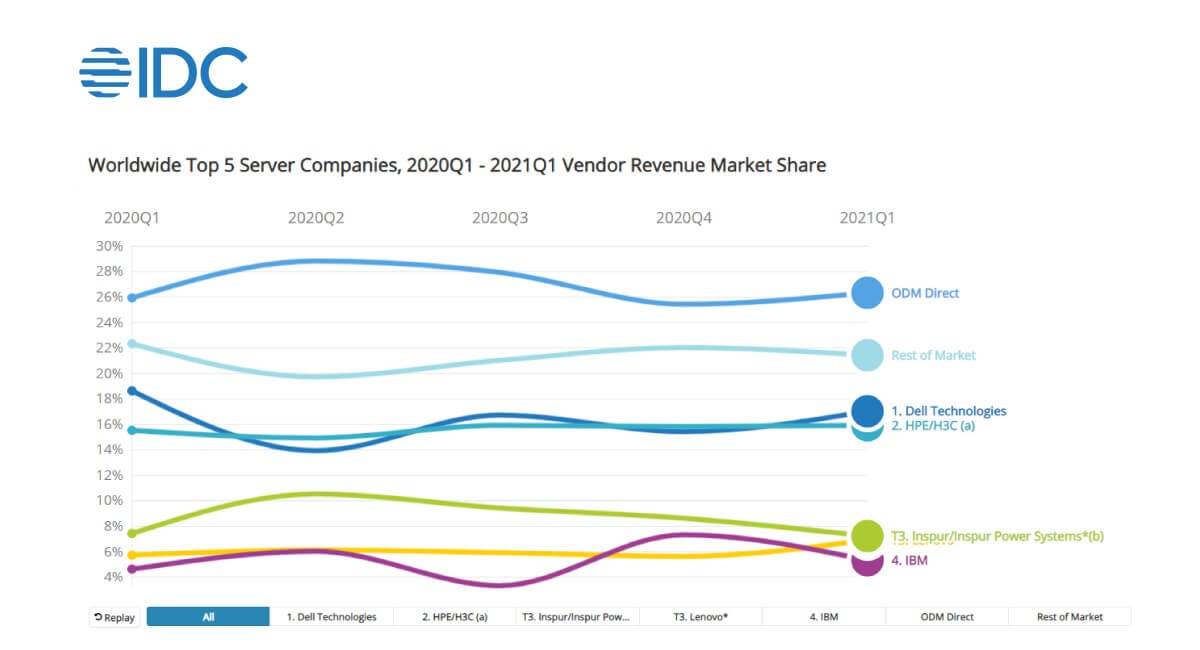

Here’s older data, but there haven’t been massive changes since:

Original design manufacturers (ODMs) are responsible for the design and manufacturing of a product that other companies will buy and rebrand. An original equipment manufacturer (OEM) will then buy that hardware and focus on sales, marketing, and support for those products. ODM direct in this chart is when ODMs sell directly to companies like the hyperscalers.

You might’ve heard the recent hype around SMCI, which is primarily an OEM but does some ODM work as well. They are an important OEM for Nvidia, which has led to their explosive rise over the last year.

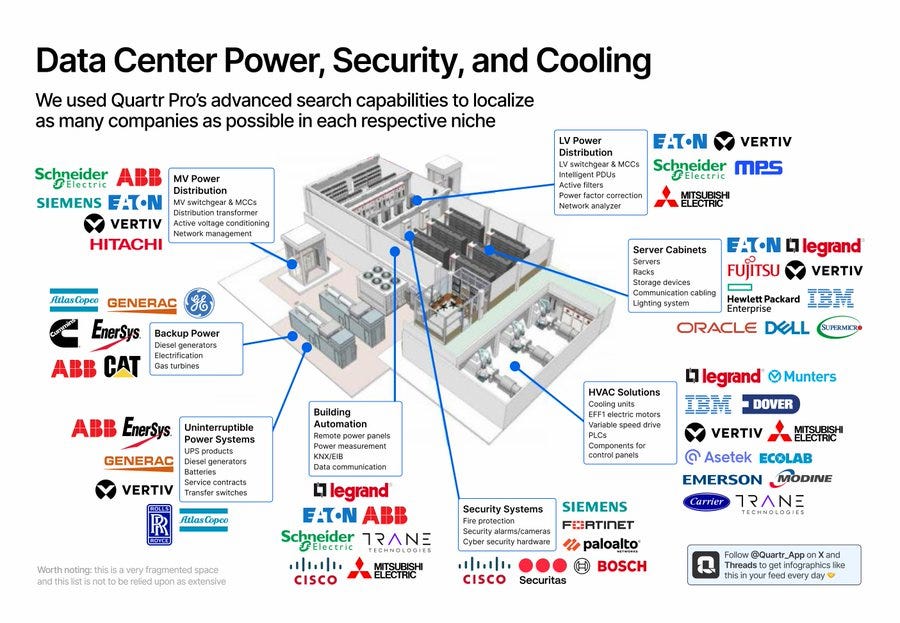

2. Power Management/Cooling

It’s estimated that 50-60% of the costs of a data center come from compute, networking, and storage. The other 40-50% comes from power, cooling, security, operators, construction, real estate, etc.

The largest share of that 40-50% goes to power management, which includes power distribution, generators, and uninterruptible power systems.

The leaders here are the large industrial companies such as Schneider Electric, ABB, Eaton, and Siemens. Here’s a good graphic from Quartr visualizing it:

Data center cooling includes chillers, computer room air conditioners (CRAC), computer room air handlers (CRAH), and HVAC units.

Market leaders include Vertiv, Stulz, Schneider Electric, and Airedale International.

3. Operators

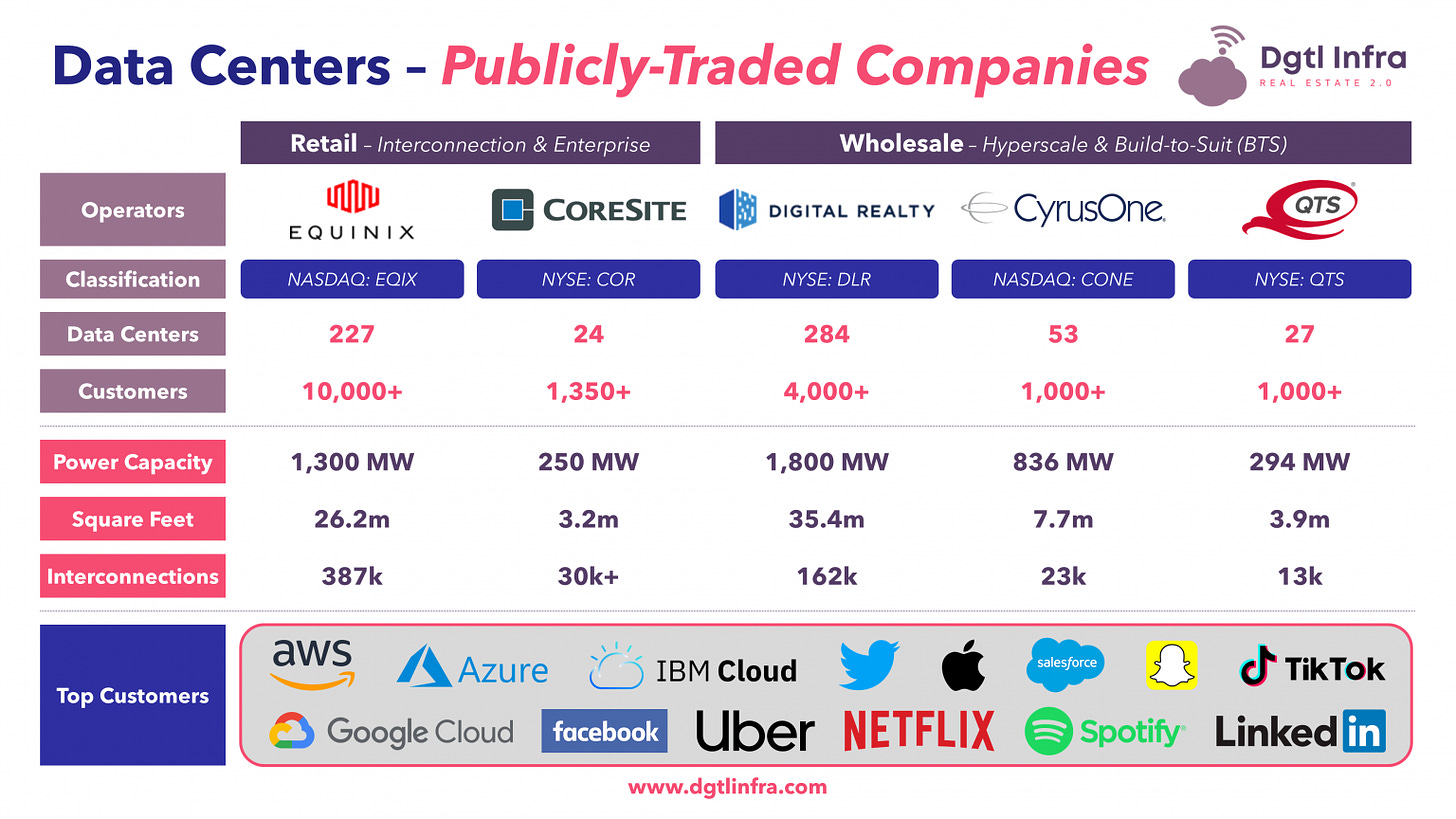

Operators are 3rd-party companies that build, manage, and host physical servers for other companies. A popular model is colocation, where an operator like Equinix offers space for companies to place servers and networking equipment.

A good visual here:

4. Construction/Real Estate/Electricity/Security

Several other pieces of the value chain make data centers possible. Land must be bought and developed. The data centers use massive amounts of electricity. Also, data centers must be secure since they contain some of the most important information in the world.

Bringing it All Together

Finally, I like to finish these primers with a conclusion on how I see the industry. Unlike the data platform space, where much of the opportunity is for private investors and VCs, data centers provide an excellent way for public investors to invest in tech.

The customer concentration and barriers to entry of data centers lend themselves much more to large, public companies. Conversely, this appears to be a challenging space for startups, which would need to be experienced and well-funded to break into this market.

There are a great number of ways to invest in data centers: semiconductors, networking, storage, cloud providers (investing in demand instead of supply), real estate, electricity, power management, and cooling. All of these areas are likely to benefit from the growth of computing demand. The answer for investors comes down to which investments carry the least risk of misanalysis given our expertise. If an investor has expertise in any of these areas, they’ll likely do well.

Put simply, “What companies am I confident will be important and growing in the data center in 5-10 years?”

As I see it, the semiconductor value chain is a great way for me to invest in data centers. I don’t see semis losing their relevance any time soon, and the foundries, equipment companies, and tooling vendors provide strong exposure to data centers with less risk of misanalysis due to their huge moats.

With that being said, I think it’s unlikely a portfolio of semi design companies with exposure to the data center (Nvidia, AMD, Intel, Broadcom, Marvell, etc) will underperform the markets over a long period.

As always, thanks for reading and feel free to reach out on Twitter/X, LinkedIn, or at eric.flaningam@publiccomps.com.

Disclaimer: The information contained in this article is not investment advice and should not be used as such. Investors should do their own due diligence before investing in any securities discussed in this article. While I strive for accuracy, I can’t guarantee the accuracy or reliability of this information. This article is based on my opinions and should be considered as such, not as a statement of fact. I’m a Microsoft employee; all information contained within is public information or my own opinion. The views contained within don't represent the views of any current or former employers.